The RedLine team has recently embarked on an ambitious cloud-based project: implementing the National Weather Service (NWS) Global Workflow. For this project, the workflow will run several Global Data Assimilation System (GDAS) cycles. GDAS includes preprocessing codes, analysis codes, the Finite Volume Cubed Sphere Global Forecast System (FV3GFS) model, and post-processing codes. Output from the global forecast provides input data for the Global Ensemble Forecast System (GEFS) as well as regional and local forecast models. We will implement version 16 of the workflow, which is the current operational workflow managed by NCEP Central Operations (NCO).

The availability of high-speed, low-latency interconnects, low-overhead CPU-dense VMs, and the ability to deploy parallel filesystems such as Lustre make running a complex weather model like the FV3GFS viable in the cloud. We’ve broken the project down into two distinct phases: implementing and baselining a parallel filesystem, followed by cycling the global workflow in the cloud. This blog post focuses on phase 1, building and deploying a parallel filesystem.

The project will use the Lustre parallel filesystem. Specific performance requirements for FV3 and the associated data assimilation have not yet been defined. Therefore, we will build out a relatively small filesystem and test its ability to scale, on the assumption that we can adjust the filesystem to meet our performance objectives once we begin cycling the workflow. Ideally, linear scaling for Lustre will give us flexibility in performance tuning the model and can help future-proof model upgrades (higher resolution, etc.).

Microsoft Azure CycleCloud will be used to create the Lustre filesystem and manage its startup and shutdown, as well as to provision a compute cluster for the performance testing. The IOR benchmark will be used to test Lustre’s IO performance. Note that in all tests, the number of Lustre OSS servers equals the number of compute nodes running IOR.

For the baseline test, we start with a Lustre cluster consisting of 4 OSS servers and a single dedicated MDS/MGS server. On the compute side, we’ll have a 4-node compute cluster to run our IOR tests. Both the Lustre and compute clusters are provisioned with the Microsoft Azure CycleCloud cluster provisioning tool.

The Lustre cluster was built with 4 OSSes using Azure’s Intel-based Standard_D64ds_v4 and 1 MDS using Azure’s Standard_D32ds_v4, both powered by Intel’s Xeon Platinum 8272CL (Cascade Lake) processor. These node types were selected for their balance of price to bandwidth and storage, giving a total of 4 OSTs, 9600 GB of filesystem capacity, and 120 Gbps of bandwidth.

| Lustre Node | Node Type | vCPUs | RAM (GB) | Storage | Network BW |
| --- | --- | --- | --- | --- | --- |
| OSS | Standard_D64ds_v4 | 64 | 256 | 2400 GB | 30 Gbps |
| MDS | Standard_D32ds_v4 | 32 | 128 | 1200 GB | 16 Gbps |
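The totals quoted above follow directly from the per-node figures in the table. As a minimal sketch, assuming one OST per OSS (consistent with the 4 OSTs quoted for 4 OSSes), the arithmetic looks like this; the function and variable names are illustrative only:

```python
# Per-OSS figures copied from the table above.
OSS_STORAGE_GB = 2400
OSS_NETWORK_GBPS = 30


def aggregate(oss_count, storage_gb=OSS_STORAGE_GB, network_gbps=OSS_NETWORK_GBPS):
    """Return (total capacity in GB, total OSS network bandwidth in Gbps).

    Assumes one OST per OSS and that every OSS has identical specs.
    """
    return oss_count * storage_gb, oss_count * network_gbps


capacity_gb, bandwidth_gbps = aggregate(4)
print(f"4 OSS: {capacity_gb} GB capacity, {bandwidth_gbps} Gbps aggregate bandwidth")
```

The same helper applies to any larger OSS count, as long as every OSS uses the same node type.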

The Compute Cluster was built with 4 nodes using Azure’s High Performance compute node, Standard_HC44rs, powered by Intel’s Xeon Platinum 8168 (Skylake), which provides 44 vCPUs paired to 352 GB of RAM with EDR InfiniBand providing 100 Gbps bandwidth. These are the same compute nodes that we will utilize to run the Global Workflow.

Our testing protocol uses IOR to test parallel filesystem performance. For each run, we execute a script that pre-creates one file for each task and assigns that file to a specific OST in a round-robin fashion. This ensures that tasks are spread evenly across the OSTs rather than piling onto a single one. To ensure read tests don’t take advantage of client write-caching, we set IOR’s -Q option to T+1, where T is the number of tasks per node, which effectively shifts the read test to an adjacent node. Each task writes a 16 GB file with a 1 MB transfer size. Read and write results are given in mebibytes (not megabytes) per second.
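The pre-creation script itself is not shown in this post, so the following is only a sketch of how such a protocol could be assembled. It assumes files are pinned with `lfs setstripe -c 1 -i <index>`, that IOR runs in file-per-process mode (`-F`) and names each file by appending an 8-digit rank to the `-o` path, and that `-C` is combined with `-Q` for the read-back shift. The `mpirun` launcher, the test path, and the helper names are hypothetical.

```python
import shlex


def precreate_commands(num_nodes, tasks_per_node, num_osts, test_file):
    """Build one `lfs setstripe` command per IOR task, round-robin over OSTs."""
    commands = []
    for rank in range(num_nodes * tasks_per_node):
        ost_index = rank % num_osts           # round-robin OST assignment
        path = f"{test_file}.{rank:08d}"      # assumed IOR file-per-process name
        commands.append(
            f"lfs setstripe -c 1 -i {ost_index} {shlex.quote(path)}"
        )
    return commands


def ior_command(num_nodes, tasks_per_node, test_file):
    """Build an IOR write+read command with the read shifted by T+1 tasks."""
    total_tasks = num_nodes * tasks_per_node
    return (
        f"mpirun -np {total_tasks} ior -w -r -F -C -Q {tasks_per_node + 1} "
        f"-t 1m -b 16g -o {shlex.quote(test_file)}"
    )


if __name__ == "__main__":
    # Hypothetical example: 4 nodes, 2 tasks per node, 4 OSTs.
    for cmd in precreate_commands(4, 2, 4, "/lustre/ior/testfile"):
        print(cmd)
    print(ior_command(4, 2, "/lustre/ior/testfile"))
```

The sketch only prints the commands rather than running them, so it can be checked on a machine without Lustre or IOR installed.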

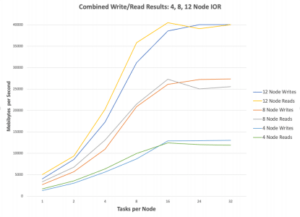

Our IOR tests are scaling tests showing how the storage solution and compute nodes react to increasing IO workloads.

In the tests with 4 compute nodes and 4 Lustre OSS servers, we can see the effect of increasing the number of tasks per node. Performance scales linearly from 1 task per node through 16 tasks per node. The tests generally plateau at 16 tasks per node, reaching approximately 12,900 MB/sec write and 12,500 MB/sec read performance. The purpose of pushing past the peak is to observe how the compute nodes and storage solution respond to overcommitment of the storage solution or network link. Given the 30 Gbps network bandwidth limit on each of the 4 OSS nodes (120 Gbps in aggregate), the solution is hitting its peak performance.
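To put the plateau in context, the aggregate OSS network bandwidth can be converted into the same units as the IOR results. This is a rough sketch: it assumes the quoted figures are in MiB/sec (as stated in the testing protocol), treats 1 Gbps as 10^9 bits per second, and ignores protocol overhead, so the ceiling is optimistic.

```python
MIB = 1024 ** 2  # bytes per mebibyte


def gbps_to_mib_per_s(gbps):
    """Convert gigabits per second (10^9 bits/s) to mebibytes per second."""
    return gbps * 1e9 / 8 / MIB


# 4 OSS nodes at 30 Gbps each.
ceiling = gbps_to_mib_per_s(4 * 30)

for label, measured in (("write", 12_900), ("read", 12_500)):
    print(f"{label}: {measured / ceiling:.0%} of the {ceiling:,.0f} MiB/s ceiling")
```

Under these assumptions the measured plateau sits at roughly 87 to 90 percent of the theoretical network limit, which is consistent with the OSS network links being the bottleneck.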

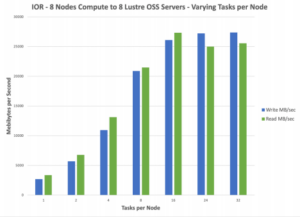

For our next test we will double the storage and compute resources to determine the scalability of our solution. This doubling increases the total compute nodes to 8 and Lustre OSS servers to 8, providing a total storage of 19,200 GB.

In the tests with 8 compute nodes and 8 Lustre OSS servers, we again see the effect of increasing the number of tasks per node. Performance scales linearly from 1 task per node through 16 tasks per node. These tests also plateau at 16 tasks per node, reaching approximately 26,000 MB/sec write and 27,000 MB/sec read performance. The nearly identical patterns in the 4-node and 8-node series are promising.
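One way to quantify how close this is to linear scaling is to divide the measured speedup by the ideal speedup. The sketch below uses only the approximate plateau figures quoted in this post; the dictionary layout and function name are illustrative.

```python
# Approximate plateau throughput quoted above, keyed by OSS/compute node count.
plateau = {
    4: {"write": 12_900, "read": 12_500},
    8: {"write": 26_000, "read": 27_000},
}


def scaling_efficiency(results, base_nodes, scaled_nodes, op):
    """Measured speedup divided by the ideal (linear) speedup; 1.0 is perfectly linear."""
    speedup = results[scaled_nodes][op] / results[base_nodes][op]
    ideal = scaled_nodes / base_nodes
    return speedup / ideal


for op in ("write", "read"):
    print(f"{op}: {scaling_efficiency(plateau, 4, 8, op):.2f}x of linear")
```

Values slightly above 1.0 are plausible here because the inputs are rounded, approximate plateau numbers rather than exact measurements.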

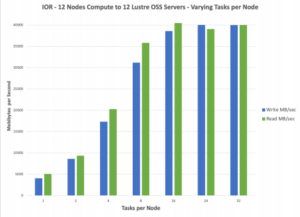

Lastly, we add another four OSS servers and four compute nodes, for 12 of each, to confirm the scaling capability and profile.

In the 12-node test, we see a familiar pattern: read IO peaks at 16 tasks per node, much as in the 4-node and 8-node tests. The IO plateau is reached at the 16 tasks per node case and holds through the 24 and 32 tasks per node cases. Looking at the combined results across all three tests, we see near-linear scaling.

Based on the near-linear scaling we’re seeing in our tests, we feel confident that the configuration we’ve defined for Lustre will meet our requirements for running the NWS Global Workflow in Azure. Our next step will be to begin running the Global Workflow in the cloud to validate our assumptions. Stay tuned for a follow-up to this blog post on those results.